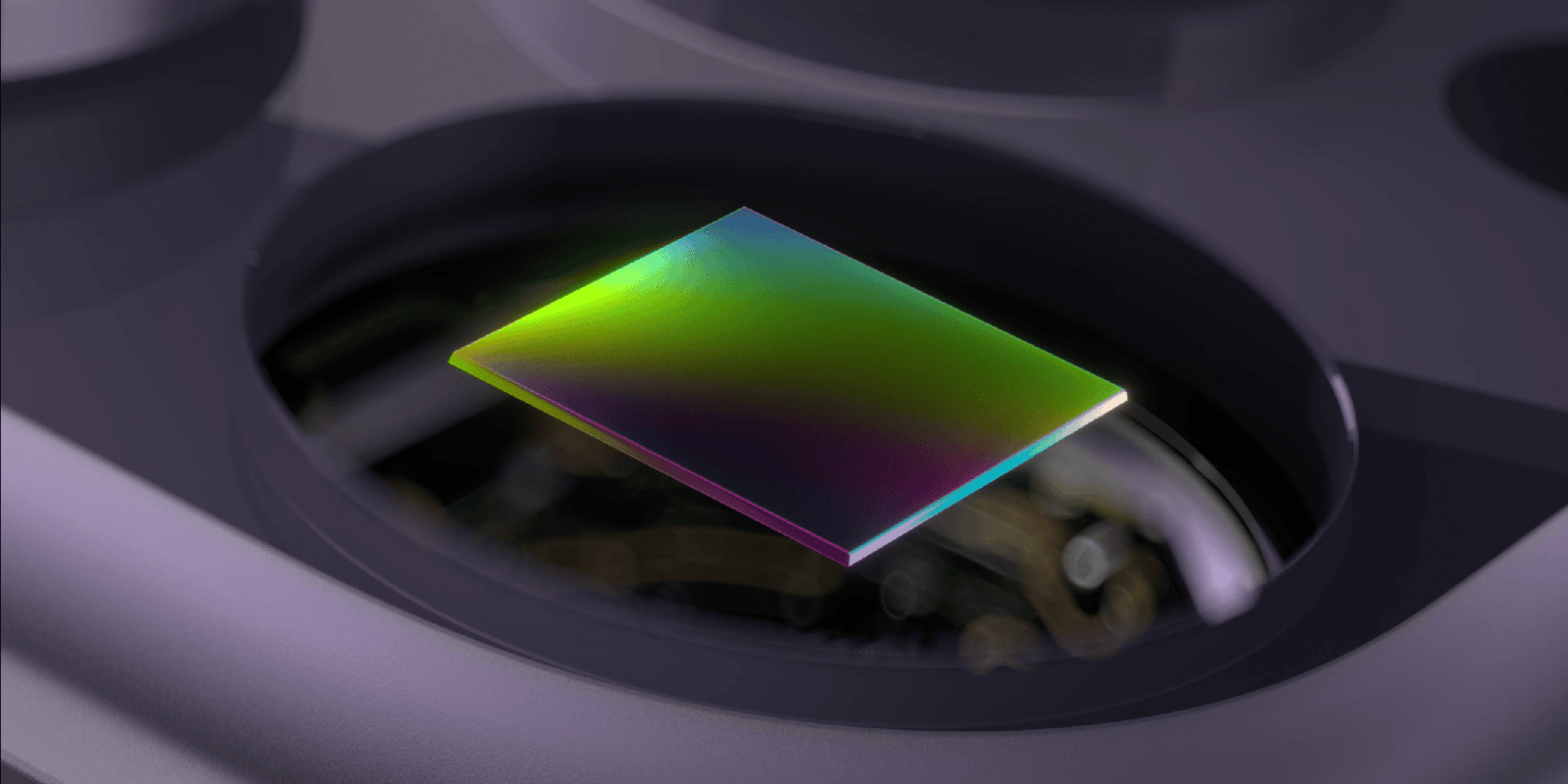

Apple has unveiled an AI model designed to improve low-light photography on iPhones. The model, DarkDiff, integrates directly into the camera’s image processing pipeline, allowing it to recover detail that would otherwise be lost in very dark images. Apple researchers developed it with Purdue University to tackle the grainy, noisy photos that low-light conditions tend to produce.

Addressing Low-Light Photography Challenges

Many smartphone users know the frustration of shooting in dim light and ending up with images marred by digital noise. Noise appears when the camera’s image sensor cannot gather enough light, and manufacturers such as Apple have responded with a range of image processing algorithms. These methods have been criticized for producing overly smooth images that lose fine detail or, in some cases, alter the original content beyond recognition.

DarkDiff aims to address these shortcomings. According to the study, titled “DarkDiff: Advancing Low-Light Raw Enhancement by Retasking Diffusion Models for Camera ISP,” the model computes attention over localized image patches. This helps preserve local structures within the image and reduces the model’s tendency to hallucinate content that was not in the scene, a known risk when generative models are used for image enhancement.
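To make the idea of attention over localized patches more concrete, here is a minimal sketch in plain Python. It is not the architecture from the study: the function name `patch_attention`, the non-overlapping patch layout, and the use of the raw features as queries, keys, and values (no learned projections) are all assumptions made for illustration. The point it shows is that each pixel is rebuilt only from pixels in its own patch, so distant regions cannot influence it.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def patch_attention(features, patch_size):
    """Self-attention restricted to non-overlapping patches of a 2D grid.

    features: list of rows; each cell is a feature vector (list of floats).
    Hypothetical simplification: queries, keys and values are the features
    themselves, with no learned projection matrices.
    """
    height, width = len(features), len(features[0])
    dim = len(features[0][0])
    out = [[None] * width for _ in range(height)]
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Coordinates of every cell that belongs to this patch.
            coords = [(r, c)
                      for r in range(top, min(top + patch_size, height))
                      for c in range(left, min(left + patch_size, width))]
            for qr, qc in coords:
                q = features[qr][qc]
                # Scaled dot-product scores against cells of the same patch only.
                scores = [sum(a * b for a, b in zip(q, features[r][c])) / math.sqrt(dim)
                          for r, c in coords]
                weights = softmax(scores)
                out[qr][qc] = [sum(w * features[r][c][k]
                                   for w, (r, c) in zip(weights, coords))
                               for k in range(dim)]
    return out

# A 2x4 grid split into two 2x2 patches (made-up feature values).
grid = [[[1.0, 0.0], [1.0, 0.0], [0.0, 5.0], [0.0, 1.0]],
        [[1.0, 0.0], [1.0, 0.0], [0.0, 3.0], [0.0, 2.0]]]
result = patch_attention(grid, patch_size=2)
print(result[0][0])  # rebuilt from the left patch only
print(result[0][2])  # rebuilt from the right patch only
```

Because the attention weights are computed per patch, changing a value in the right-hand patch leaves every output in the left-hand patch untouched. That locality is the property the study credits with preserving local structure.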

How DarkDiff Works

DarkDiff does not replace the entire pipeline. The camera’s image signal processor (ISP) still handles the early stages on the raw sensor data, such as white balance and demosaicing. DarkDiff then works on the resulting linear RGB image, denoising it and producing the final sRGB output. A key component of DarkDiff is a diffusion technique known as classifier-free guidance, which controls how strongly the output follows the input image versus the model’s learned visual priors.

When the guidance is set lower, the model generates smoother patterns, while higher guidance promotes sharper textures and finer details. The setting has to be chosen carefully, because higher guidance also increases the risk of introducing unwanted artifacts or hallucinations in the final image.
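The guidance trade-off can be illustrated with the commonly used classifier-free guidance formula, in which the model’s unconditional and conditional predictions are blended by a scale `w`. The sketch below is only an illustration under assumptions: the function name `guided_prediction`, the sample numbers, and the choice of the low-light input as the conditioning signal are hypothetical, and the exact parameterization used in DarkDiff may differ.

```python
def guided_prediction(uncond, cond, guidance_scale):
    """Common classifier-free guidance form: uncond + w * (cond - uncond).

    uncond: model prediction without the conditioning signal.
    cond:   model prediction with the conditioning signal
            (assumed here to be the low-light input image).
    """
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]

# Made-up per-pixel predictions, for illustration only.
uncond = [0.10, 0.20, 0.30]
cond = [0.20, 0.10, 0.50]

for w in (0.0, 1.0, 3.0):
    # w = 0 returns the unconditional prediction, w = 1 the conditional one,
    # and w > 1 extrapolates past the conditional prediction.
    print(w, guided_prediction(uncond, cond, w))
```

In this convention, larger values of `w` push the result further along the direction that separates the two predictions, which is why a high setting can exaggerate detail into artifacts.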

To validate the effectiveness of DarkDiff, researchers conducted tests using actual low-light photographs captured with high-end cameras such as the Sony A7SII. The images were taken at night with exposure times as short as 0.033 seconds, and the results were compared against reference photos taken with a tripod over an exposure period 300 times longer. The comparisons highlighted the significant improvements offered by DarkDiff in low-light conditions.
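A quick calculation puts the exposure gap in perspective. The snippet below uses only the two figures quoted above; expressing the gap in photographic stops assumes aperture and ISO were held constant between the two shots, which is an assumption made here and not something stated in the article.

```python
import math

short_exposure_s = 0.033   # shortest exposure quoted above (about 1/30 s)
ratio = 300                # reference exposure is 300 times longer

reference_exposure_s = short_exposure_s * ratio
stops = math.log2(ratio)   # each stop doubles the light gathered

print(f"reference exposure: {reference_exposure_s:.1f} s")  # 9.9 s
print(f"exposure gap: {stops:.1f} stops")                   # 8.2 stops
```

In other words, the reference frames were exposed for roughly ten seconds, gathering about 300 times as much light as the handheld-speed shots DarkDiff had to restore.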

Limitations and Future Prospects

While DarkDiff presents notable advancements, the researchers acknowledge some limitations. The AI-based processing is considerably slower than traditional methods, which may necessitate cloud processing to manage the computational demands. Running such intensive operations locally could quickly deplete a smartphone’s battery, raising concerns over practicality for everyday users.

The study also points out challenges with recognizing non-English text in low-light scenes. And for now, there is no indication that DarkDiff will be integrated into iPhones in the near future. Nonetheless, the research underscores Apple’s continued investment in computational photography.

As smartphone users demand better camera performance, technologies like DarkDiff reflect a broader industry trend: using software to get past the limits of small sensors and lenses.

For further details and additional comparisons between DarkDiff and other denoising methods, see the full study.